NGINX Reverse Proxy Configuration and Troubleshooting

The difference between a forward proxy server and a reverse proxy server

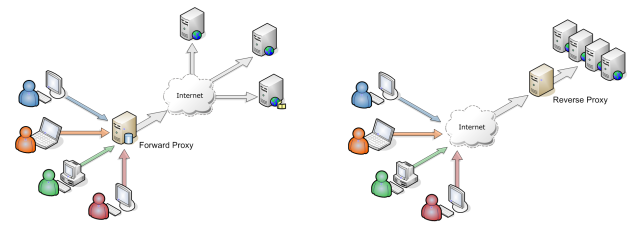

A common question is: what is the difference between a forward proxy server and a reverse proxy server?

An ordinary forward proxy is an intermediate server that sits between the client and the origin server. In order to get content from the origin server, the client sends a request to the proxy naming the origin server as the target. The proxy then requests the content from the origin server and returns it to the client. The client must be specially configured to use the forward proxy to access other sites.

A typical usage of a forward proxy is to provide Internet access to internal clients that are otherwise restricted by a firewall. The forward proxy can also use caching to reduce network usage.

A reverse proxy (or gateway), by contrast, appears to the client just like an ordinary web server. No special configuration on the client is necessary. The client makes ordinary requests for content in the namespace of the reverse proxy. The reverse proxy then decides where to send those requests and returns the content as if it were itself the origin.

A typical usage of a reverse proxy is to provide Internet users access to a server that is behind a firewall. Reverse proxies can also be used to balance load among several back-end servers or to provide caching for a slower back-end server. In addition, reverse proxies can be used simply to bring several servers into the same URL space.

Test Configuration File Syntax

After changing the NGINX configuration file nginx.conf, or any included configuration file such as a site-specific configuration, use nginx -t to check the syntax and validate the configuration before applying it.

$ nginx -t

nginx: [alert] could not open error log file: open() "/var/log/nginx/error.log" failed (13: Permission denied)

nginx: configuration file /etc/nginx/nginx.conf test failed

You may need sudo:

$ sudo nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Another useful option is -T, which tests the configuration and dumps it to the console.

$ sudo nginx -T

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

# configuration file /etc/nginx/nginx.conf:

user www-data;

worker_processes auto;

pid /run/nginx.pid;

events {

worker_connections 768;

# multi_accept on;

}

http {

##

# Basic Settings

##

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

# server_tokens off;

# server_names_hash_bucket_size 64;

# server_name_in_redirect off;

include /etc/nginx/mime.types;

default_type application/octet-stream;

##

# SSL Settings

##

ssl_protocols TLSv1 TLSv1.1 TLSv1.2; # Dropping SSLv3, ref: POODLE

ssl_prefer_server_ciphers on;

...

...

...

Reload NGINX without restarting the server

The -s option sends a signal to the NGINX master process.

After changing a configuration file, send the reload signal to apply it without restarting the server.

$ sudo nginx -s reload

All available signals: stop, quit, reopen, reload

-s signal: send signal to a master process: stop, quit, reopen, reload

Turn off server signature

The goal is to hide the NGINX version on error pages, so that they show only nginx instead of nginx/1.16.2.

The HTTP response header should hide the version as well:

$ curl -I localhost:80

HTTP/1.1 200 OK

Server: nginx

...

Solution

On Ubuntu, install nginx-extras (it provides the more_set_headers directive used below):

$ sudo apt-get install nginx-extras -y

Edit /etc/nginx/nginx.conf and add the following inside the http block:

http {

more_set_headers "Server: foo";

server_tokens off;

}

Reload NGINX:

$ sudo service nginx reload

Add a trailing / or not

You can change the proxied URL path. For example, when NGINX receives an incoming request with the path /jenkins/job/, it can forward it to the origin server as /job/.

Pay attention to whether the location and the proxy_pass URL end with a trailing slash (/), because it changes the path that is sent upstream.

server {

listen 2020;

location /some/path {

proxy_pass http://127.0.0.1:3000;

}

}

With this config, http://127.0.0.1:2020/some/path/foo/bar is passed to the origin server as http://127.0.0.1:3000/some/path/foo/bar.

server {

listen 2020;

location /some/path {

proxy_pass http://127.0.0.1:3000/another;

}

}

With this config, http://127.0.0.1:2020/some/path/foo/bar is passed to the origin server as http://127.0.0.1:3000/another/foo/bar.

server {

listen 2020;

location /some/path/ {

proxy_pass http://127.0.0.1:3000/another;

}

}

With this config, http://127.0.0.1:2020/some/path/foo/bar is passed to the origin server as http://127.0.0.1:3000/anotherfoo/bar.

NOTE: There is no / between another and foo, because the location ends with / but the proxy_pass URL does not.

server {

listen 2020;

location /some/path/ {

proxy_pass http://127.0.0.1:3000/another/;

}

}

With this config, http://127.0.0.1:2020/some/path/foo/bar is passed to the origin server as http://127.0.0.1:3000/another/foo/bar.
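The prefix replacement in the four configurations above can be modelled in a few lines of Python. This is only a sketch for reasoning about trailing slashes, not how NGINX is implemented: the function name proxied_url is hypothetical, and it assumes a plain prefix location, no query string, no regular expressions, and no variables in proxy_pass.

```python
from typing import Optional
from urllib.parse import urlsplit


def proxied_url(location: str, proxy_pass: str, request_path: str) -> Optional[str]:
    """Model how a prefix location plus proxy_pass maps a request path.

    Returns None when the request path does not start with the location prefix.
    """
    if not request_path.startswith(location):
        return None
    target = urlsplit(proxy_pass)
    origin = f"{target.scheme}://{target.netloc}"
    if target.path == "":
        # proxy_pass has no URI part: the request path is passed unchanged.
        return origin + request_path
    # proxy_pass has a URI part: the matched location prefix is replaced by it.
    return origin + target.path + request_path[len(location):]
```

Calling proxied_url("/some/path/", "http://127.0.0.1:3000/another", "/some/path/foo/bar") shows the missing-slash case: the prefix /some/path/ is replaced by /another, so the remainder foo/bar is glued directly onto it.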

Keep-alive not working with proxy_pass

Symptom

With proxy_pass alone, connections to the origin server are not kept alive, even though the server response carries a Connection: keep-alive header like the one below:

HTTP/1.1 200 OK

Accept-Ranges: bytes

Content-Length: 31746

Content-Type: text/html; charset=utf-8

Connection: keep-alive

Date: Wed, 05 Feb 2020 21:32:51 GMT

Adding the following Connection: keep-alive header in the proxy configuration does not help either:

proxy_set_header Connection "keep-alive";

Root Cause

Keep-alive must be enabled in an upstream block, not on a direct proxy_pass.

Keep-alive also requires the proxy to use HTTP version 1.1.

Solution

- Use an upstream block instead of a direct proxy_pass.

- Use HTTP version 1.1.

- Make sure the origin server has keep-alive enabled.

Here is a configuration sample:

upstream hugo {

server 127.0.0.1:1313;

keepalive 60;

}

location / {

proxy_http_version 1.1;

proxy_set_header Connection "";

proxy_pass http://hugo;

}

504 Gateway Timeout

Symptom

The web page takes a long time to load and eventually shows 504 Gateway Timeout.

Solution

The origin server may be slow to respond. Try increasing the timeouts:

proxy_connect_timeout 120;

proxy_send_timeout 120;

proxy_read_timeout 120;

send_timeout 120;

502 Bad Gateway - sslv3 alert handshake failure

Symptom

nginx config:

location /echo4/ {

proxy_pass https://example-upstream.com/echo4/;

}

502 Bad Gateway error:

$ curl -i localhost:2020/echo4/

HTTP/1.1 502 Bad Gateway

Server: nginx/1.17.9

Date: Thu, 12 Mar 2020 03:27:03 GMT

Content-Type: text/html

Content-Length: 157

Connection: keep-alive

<html>

<head><title>502 Bad Gateway</title></head>

<body>

<center><h1>502 Bad Gateway</h1></center>

<hr><center>nginx/1.17.9</center>

</body>

</html>

If NGINX reports a 502 error, first check the NGINX error.log.

For example, I got a 502 Bad Gateway error with the following entry in the error log:

2020/03/11 17:02:03 [error] 57041#0: *19 SSL_do_handshake() failed

(SSL: error:14094410:SSL routines:ssl3_read_bytes:sslv3 alert handshake failure:SSL alert number 40)

while SSL handshaking to upstream,

client: 127.0.0.1,

server: localhost,

request: "GET /echo4/ HTTP/1.1",

upstream: "https://14.18.7.220:443/echo4/",

host: "localhost:2020"

This tells me that the SSL handshake with the upstream failed.

Testing the upstream with openssl should show a similar error:

$ openssl s_client -connect example-upstream.com:443

CONNECTED(00000006)

4504165868:error:14004410:SSL routines:CONNECT_CR_SRVR_HELLO:

sslv3 alert handshake failure:

/BuildRoot/Library/Caches/com.apple.xbs/Sources/libressl/libressl-47.11.1/libressl-2.8/ssl/ssl_pkt.c:1200:

SSL alert number 40

4504165868:error:140040E5:SSL routines:CONNECT_CR_SRVR_HELLO:

ssl handshake failure:

/BuildRoot/Library/Caches/com.apple.xbs/Sources/libressl/libressl-47.11.1/libressl-2.8/ssl/ssl_pkt.c:585:

---

no peer certificate available

---

No client certificate CA names sent

---

SSL handshake has read 7 bytes and written 0 bytes

---

New, (NONE), Cipher is (NONE)

Secure Renegotiation IS NOT supported

Compression: NONE

Expansion: NONE

No ALPN negotiated

SSL-Session:

Protocol : TLSv1.2

Cipher : 0000

Session-ID:

Session-ID-ctx:

Master-Key:

Start Time: 1583973354

Timeout : 7200 (sec)

Verify return code: 0 (ok)

---

$ openssl version

LibreSSL 2.8.3

The openssl provided by brew does not have this issue:

$ /usr/local/Cellar/openssl\@1.1/1.1.1d/bin/openssl s_client -connect example-upstream.com:443

CONNECTED(00000006)

depth=2 C = IE, O = Baltimore, OU = CyberTrust, CN = Baltimore CyberTrust Root

verify return:1

depth=1 C = US, ST = CA, L = San Francisco, O = "CloudFlare, Inc.", CN = CloudFlare Inc ECC CA-2

verify return:1

depth=0 C = US, ST = CA, L = San Francisco, O = "Cloudflare, Inc.", CN = sni.cloudflaressl.com

verify return:1

---

Certificate chain

0 s:C = US, ST = CA, L = San Francisco, O = "Cloudflare, Inc.", CN = sni.cloudflaressl.com

i:C = US, ST = CA, L = San Francisco, O = "CloudFlare, Inc.", CN = CloudFlare Inc ECC CA-2

1 s:C = US, ST = CA, L = San Francisco, O = "CloudFlare, Inc.", CN = CloudFlare Inc ECC CA-2

i:C = IE, O = Baltimore, OU = CyberTrust, CN = Baltimore CyberTrust Root

---

Server certificate

-----BEGIN CERTIFICATE-----

MIIE4zCCBImgAwIBAgIQD...

I ran into this issue with a Cloudflare upstream server.

The root cause is that the default macOS openssl (LibreSSL) does not support TLS 1.3 properly.

Solution

To solve this issue in NGINX, reinstall NGINX (you may need to recompile it) linked against the OpenSSL library, not the LibreSSL provided by macOS.

It may also be worth checking whether the upstream requires SNI: NGINX does not send the server name to a proxied HTTPS server unless proxy_ssl_server_name on; is set, and a missing server name can lead to the same handshake failure alert.

504 Gateway Time-out - upstream timed out

Symptom

2020/10/06 18:58:09 [error] 500#500: *1418 upstream timed out (110: Connection timed out) while connecting to upstream, client: 1.2.3.4, server: foo.example.com, request: "GET / HTTP/1.1", upstream: "http://[::1]:2020/", host: "foo.example.com"

The configuration looks like this:

location / {

proxy_pass http://localhost:2020/;

}

Solution

Because the config uses localhost, the name resolved to the IPv6 address ::1, and the origin server was not listening on IPv6.

To avoid this issue, use 127.0.0.1 instead of localhost in proxy_pass:

location / {

proxy_pass http://127.0.0.1:2020/;

}
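
To see which addresses localhost resolves to on a given machine, a small Python check with the system resolver can help. This is a diagnostic sketch, not part of NGINX; the helper name resolved_addresses is hypothetical, and the result depends on the local hosts file and resolver settings.

```python
import socket
from typing import List


def resolved_addresses(host: str, port: int) -> List[str]:
    """Return the distinct IP addresses the system resolver gives for host."""
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    addresses: List[str] = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        address = sockaddr[0]  # first element is the IP for both IPv4 and IPv6
        if address not in addresses:
            addresses.append(address)
    return addresses


# Example: print(resolved_addresses("localhost", 2020))
# If ::1 appears in the output, localhost can resolve to IPv6 on this machine.
```

If ::1 is listed and the origin server binds only to 127.0.0.1, the timeout described above is a likely outcome.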

502 Bad Gateway - Too large header

Symptom

The NGINX error.log reports a too big header error:

upstream sent too big header while reading response header from upstream

You can use curl -I to fetch only the response headers, which may turn out to be very large.

For example, the response may include so many cookies that the header becomes too large:

$ curl -I localhost:3000/logout

HTTP/1.1 302 Found

Location: http://localhost:3000/

Content-Type: text/html; charset=utf-8

X-Ua-Compatible: IE=Edge

Cache-Control: no-cache

X-Request-Id: fe41f23970ed792163b49210790a1bc5

X-Runtime: 0.002031

Vary: Origin

X-Rack-Cors: miss; no-origin

Server: WEBrick/1.3.1 (Ruby/2.0.0/2015-12-16)

Date: Thu, 06 Feb 2020 03:19:03 GMT

Content-Length: 88

Connection: Keep-Alive

Set-Cookie: xsrf-token=xfyj%2FGhBGNwbfoet2VPnKTO7BWnkqAS1jY3wr%2BV9cSQ%3D; path=/

Set-Cookie: session=Vom40W3EDxuOWNWrVQ9msOYUvnwM42Eez9AvaMAtj69lbnXPwM7AGCihD40MF3zrVV/58bvj4bIU7R5NtkJPeFIx1JjJ8lI3df9uij8VvuNd4UhvNs8tOM13P4Lsl2KbYz9WY9o4ZIwpkhq+AI641jU4gKGHhHkuiYwwGioYFhLKx3/Fsn+tEEv8cgE4lYoF+G2tFJg3cL05/SVkM52; path=/; HttpOnly

Set-Cookie: lang=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: ae=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: cv=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: es=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: event69=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: mbox=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: promocode=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: s_cc=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: s_fid=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: s_gpid=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: s_nb=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: provider_id=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: provider_name=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: user_id=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: api_token=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: shost=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: app_version=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: user_token=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: dashboard=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: tags=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: option_1=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: option_2=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: option_3=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: option_4=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: option_5=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: option_6=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: memo_4s_1=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: memo_4s_2=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: memo_4s_3=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: memo_4s_4=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: memo_4s_5=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: memo_4s_6=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: memo_4s_7=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Set-Cookie: memo_4s_8=; path=/; expires=Thu, 01-Jan-1970 00:00:00 GMT

Solution

Increase proxy_buffer_size and proxy_buffers in the server block:

proxy_buffer_size 8k;

proxy_buffers 16 8k;

Note: Updating only proxy_buffer_size may not work. When you run nginx -t to test the configuration, it may report the following error:

$ sudo nginx -t

nginx: [emerg] "proxy_busy_buffers_size" must be less than the size of all "proxy_buffers" minus one buffer in /etc/nginx/nginx.conf:68

nginx: configuration file /etc/nginx/nginx.conf test failed

In this case, you also need to increase proxy_buffers.
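
The constraint in that error message can be checked by hand before editing the config. The sketch below is an approximation under stated assumptions: the function name busy_buffers_ok is hypothetical, sizes are in kilobytes, the default proxy_busy_buffers_size is taken to be twice the larger of proxy_buffer_size and one proxy_buffers buffer, and the comparison follows the wording of the message ("less than"), which may be slightly stricter than what NGINX enforces.

```python
from typing import Optional


def busy_buffers_ok(
    buffer_size_kb: int,
    buffers_number: int,
    buffers_size_kb: int,
    busy_buffers_size_kb: Optional[int] = None,
) -> bool:
    """Check proxy_busy_buffers_size against all proxy_buffers minus one buffer."""
    if busy_buffers_size_kb is None:
        # Assumed default: two buffers of the larger of the two sizes.
        busy_buffers_size_kb = 2 * max(buffer_size_kb, buffers_size_kb)
    limit_kb = (buffers_number - 1) * buffers_size_kb
    return busy_buffers_size_kb < limit_kb
```

For example, busy_buffers_ok(16, 8, 4) models proxy_buffer_size 16k with proxy_buffers 8 4k: the assumed default of 32k exceeds the 28k limit, so the check fails, while busy_buffers_ok(8, 16, 8) models the settings shown above and passes.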

Origin server redirect is missing the port number in the URL

Symptom

When the origin server responds with a redirect using the Location header, the redirect URL is missing the proxy server's port number.

Root cause

This happens because the request sent to the origin server does not carry the correct Host header.

For example, Host may be wrongly set to $host, which does not include a port number:

proxy_set_header Host $host;

Solution

Set the Host header to $proxy_host, which includes the server name and port number:

proxy_set_header Host $proxy_host;

Pass through https

In a normal reverse proxy configuration, NGINX acts as a TLS terminator and does not pass the TLS connection through to the origin server. In some scenarios you may want NGINX to pass HTTPS traffic through to the origin server, for example so that the origin server can verify the client’s TLS certificate when setting up the TLS connection.

In this case, NGINX needs to run as a load balancer that passes the traffic through.

Use a top-level stream block to configure the virtual server:

stream {

server {

listen 443;

proxy_pass original.example.com:443;

}

}

NOTE: As a load balancer, NGINX can pass through any TCP or UDP traffic, not only HTTP traffic. For example, you can also pass through MySQL traffic:

stream {

server {

listen 3306;

proxy_pass mysql-server.example.com:3306;

}

}

To set up a UDP load balancer for DNS servers, add udp after the port in the listen directive:

stream {

upstream dns_servers {

least_conn;

server 192.168.136.130:53;

server 192.168.136.131:53;

server 192.168.136.132:53;

}

server {

listen 53 udp;

proxy_pass dns_servers;

}

}

A reference reverse proxy server configuration

server {

listen 2020;

server_name example.djangocas.dev;

proxy_connect_timeout 120;

proxy_send_timeout 120;

proxy_read_timeout 120;

send_timeout 120;

proxy_buffer_size 8k;

proxy_buffers 16 8k;

proxy_http_version 1.1;

proxy_set_header Host $proxy_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $remote_addr;

location /api/ {

proxy_pass https://example.com/demo/api/;

}

location / {

proxy_pass http://127.0.0.1:1313;

}

access_log /var/log/nginx/example-site/access.log;

error_log /var/log/nginx/example-site/error.log;

}

NGINX Directives Reference

proxy_pass

Syntax: proxy_pass URL;

Default: —

Context: location, if in location, limit_except

Sets the protocol and address of a proxied server and an optional URI to which a location should be mapped. As a protocol, “http” or “https” can be specified. The address can be specified as a domain name or IP address, and an optional port:

proxy_pass http://127.0.0.1:8000/uri/;

or as a UNIX-domain socket path specified after the word “unix” and enclosed in colons:

proxy_pass http://unix:/tmp/backend.socket:/uri/;

If a domain name resolves to several addresses, all of them will be used in a round-robin fashion. In addition, an address can be specified as a server group.

Parameter value can contain variables. In this case, if an address is specified as a domain name, the name is searched among the described server groups, and, if not found, is determined using a resolver.

A request URI is passed to the server as follows:

If the proxy_pass directive is specified with a URI, then when a request is passed to the server, the part of a normalized request URI matching the location is replaced by a URI specified in the directive:

location /name/ {

proxy_pass http://127.0.0.1/remote/;

}

If proxy_pass is specified without a URI, the request URI is passed to the server in the same form as sent by a client when the original request is processed, or the full normalized request URI is passed when processing the changed URI:

location /some/path/ {

proxy_pass http://127.0.0.1;

}

Before version 1.1.12, if proxy_pass is specified without a URI, the original request URI might be passed instead of the changed URI in some cases.

In some cases, the part of a request URI to be replaced cannot be determined:

When location is specified using a regular expression, and also inside named locations. In these cases, proxy_pass should be specified without a URI.

When the URI is changed inside a proxied location using the rewrite directive, and this same configuration will be used to process a request (break):

location /name/ {

rewrite /name/([^/]+) /users?name=$1 break;

proxy_pass http://127.0.0.1;

}

In this case, the URI specified in the directive is ignored and the full changed request URI is passed to the server.

When variables are used in proxy_pass:

location /name/ {

proxy_pass http://127.0.0.1$request_uri;

}

In this case, if URI is specified in the directive, it is passed to the server as is, replacing the original request URI.

WebSocket proxying requires special configuration and is supported since version 1.3.13.

proxy_buffer_size

Syntax: proxy_buffer_size size;

Default: proxy_buffer_size 4k|8k;

Context: http, server, location

Sets the size of the buffer used for reading the first part of the response received from the proxied server. This part usually contains a small response header. By default, the buffer size is equal to one memory page. This is either 4K or 8K, depending on a platform. It can be made smaller, however.

proxy_buffers

Syntax: proxy_buffers number size;

Default: proxy_buffers 8 4k|8k;

Context: http, server, location

Sets the number and size of the buffers used for reading a response from the proxied server, for a single connection. By default, the buffer size is equal to one memory page. This is either 4K or 8K, depending on a platform.

proxy_busy_buffers_size

Syntax: proxy_busy_buffers_size size;

Default: proxy_busy_buffers_size 8k|16k;

Context: http, server, location

When buffering of responses from the proxied server is enabled, limits the total size of buffers that can be busy sending a response to the client while the response is not yet fully read. In the meantime, the rest of the buffers can be used for reading the response and, if needed, buffering part of the response to a temporary file. By default, size is limited by the size of two buffers set by the proxy_buffer_size and proxy_buffers directives.

proxy_http_version

Syntax: proxy_http_version 1.0 | 1.1;

Default: proxy_http_version 1.0;

Context: http, server, location

This directive appeared in version 1.1.4.

Sets the HTTP protocol version for proxying. By default, version 1.0 is used. Version 1.1 is recommended for use with keepalive connections and NTLM authentication.

proxy_set_header

Syntax: proxy_set_header field value;

Default: proxy_set_header Host $proxy_host;

proxy_set_header Connection close;

Context: http, server, location

Allows redefining or appending fields to the request header passed to the proxied server.

The value can contain text, variables, and their combinations.

These directives are inherited from the previous level if and only if

there are no proxy_set_header directives defined on the current level.

By default, only two fields are redefined:

proxy_set_header Host $proxy_host;

proxy_set_header Connection close;

If caching is enabled, the header fields “If-Modified-Since”, “If-Unmodified-Since”, “If-None-Match”, “If-Match”, “Range”, and “If-Range” from the original request are not passed to the proxied server.

An unchanged “Host” request header field can be passed like this:

proxy_set_header Host $http_host;

However, if this field is not present in a client request header then nothing will be passed. In such a case it is better to use the $host variable - its value equals the server name in the “Host” request header field or the primary server name if this field is not present:

proxy_set_header Host $host;

In addition, the server name can be passed together with the port of the proxied server:

proxy_set_header Host $host:$proxy_port;

If the value of a header field is an empty string then this field will not be passed to a proxied server:

proxy_set_header Accept-Encoding "";

NGINX Embedded Variables

Embedded variables that can be used to compose headers using the proxy_set_header directive.

$host

In this order of precedence: host name from the request line, or host name from the “Host” request header field, or the server name matching a request.

It does not include port number.

e.g. In request curl 'http://localhost:2020/abc', the $host is localhost.
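
The order of precedence for $host can be written out as a small Python sketch. It only models the description above: the function names are hypothetical, and details such as case normalization or validation of the host name are deliberately left out.

```python
from typing import Optional


def _strip_port(value: str) -> str:
    """Drop a trailing :port, keeping bracketed IPv6 literals intact."""
    if value.startswith("["):
        return value[: value.index("]") + 1]
    return value.split(":", 1)[0]


def nginx_host(
    request_line_host: Optional[str],
    host_header: Optional[str],
    server_name: str,
) -> str:
    """Pick the value of $host in the documented order of precedence."""
    for candidate in (request_line_host, host_header):
        if candidate:
            return _strip_port(candidate)
    # Neither the request line nor the Host header names a host.
    return server_name
```

For the curl request above, nginx_host(None, "localhost:2020", "example.djangocas.dev") yields localhost, without the port.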

$remote_addr

client address. e.g. 1.2.3.4.

$remote_port

client port. e.g. 24510.

$server_name

name of the server which accepted a request.

$server_port

port of the server which accepted a request.

e.g. In request curl 'http://localhost:2020/abc', the $server_port is 2020.

$proxy_host

name and port of a proxied server as specified in the proxy_pass directive.

e.g. In the following config, $proxy_host is example.com:2019.

location /path1/ {

proxy_pass http://example.com:2019/another/;

}

$proxy_port

port of a proxied server as specified in the proxy_pass directive, or the protocol’s default port.
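
As a rough illustration of how $proxy_host and $proxy_port relate to the proxy_pass URL, the following Python sketch derives both from a URL string. The function name is hypothetical, and it assumes a plain http or https URL (no UNIX sockets, upstream groups, or variables). Leaving the port out of $proxy_host when it equals the protocol default is an assumption about NGINX behaviour.

```python
from typing import Tuple
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def proxy_host_and_port(proxy_pass: str) -> Tuple[str, str]:
    """Return modelled ($proxy_host, $proxy_port) values for a proxy_pass URL."""
    target = urlsplit(proxy_pass)
    default_port = DEFAULT_PORTS[target.scheme]
    port = target.port if target.port is not None else default_port
    if port == default_port:
        # Assumption: the default port is not repeated in $proxy_host.
        proxy_host = str(target.hostname)
    else:
        proxy_host = f"{target.hostname}:{port}"
    return proxy_host, str(port)
```

With the config above, proxy_host_and_port("http://example.com:2019/another/") gives example.com:2019 and 2019.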

$proxy_add_x_forwarded_for

the “X-Forwarded-For” client request header field with the $remote_addr variable appended to it, separated by a comma. If the “X-Forwarded-For” field is not present in the client request header, the $proxy_add_x_forwarded_for variable is equal to the $remote_addr variable.
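
The rule for $proxy_add_x_forwarded_for is simple enough to express directly. The sketch below uses a hypothetical function of the same name; joining with a comma followed by a space is an assumption, since the description only says "separated by a comma".

```python
from typing import Optional


def proxy_add_x_forwarded_for(client_header: Optional[str], remote_addr: str) -> str:
    """Model $proxy_add_x_forwarded_for for one request."""
    if client_header is None:
        # No X-Forwarded-For from the client: the value is just $remote_addr.
        return remote_addr
    # Otherwise append $remote_addr to the existing chain.
    return f"{client_header}, {remote_addr}"
```

A request arriving from 1.2.3.4 that already carries X-Forwarded-For: 10.0.0.1 would be forwarded with the value 10.0.0.1, 1.2.3.4 under this model.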

Reference

- NGINX Reverse Proxy

- NGINX: HTTP Keepalive Connections and Web Performance

- NGINX: Module ngx_http_proxy_module

- NGINX: Module ngx_http_core_module

- NGINX: TCP and UDP Load Balancing
